Counterfactual Explanations and Model Reconstruction

This project explored how the additional information carried by counterfactual explanations can be exploited to reconstruct a surrogate of the underlying model. The work was later extended in several directions, including privately retrieving counterfactual explanations and data-efficient LLM distillation using counterfactual explanations.

Counterfactual explanations and model extraction attacks

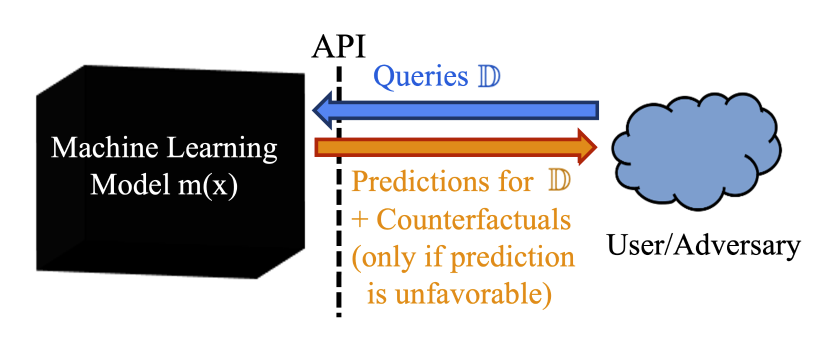

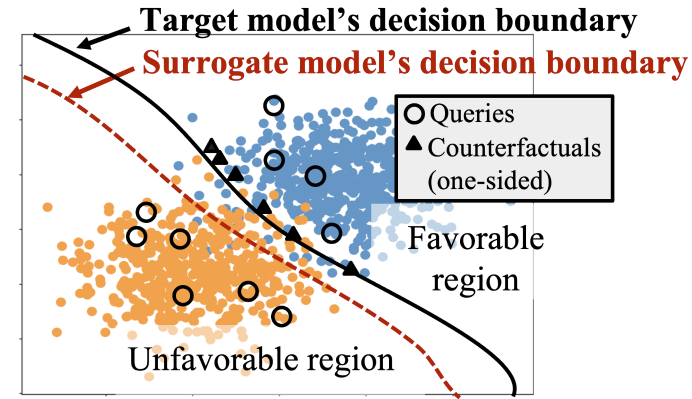

Counterfactual explanations show how to obtain a favorable outcome from a model with a minimal perturbation of the input. However, they can also be exploited to leak information about the underlying model, raising privacy concerns. Prior work [1] has shown that an adversary can request counterfactual explanations for several input instances and train a surrogate model on all the queries together with their counterfactual explanations. In this project, we investigate how such model extraction attacks can be improved by further leveraging the fact that counterfactual explanations lie close to the target model's decision boundary.
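The baseline attack described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the implementation from [1] or from the project repository: the hidden target is assumed to be a linear classifier, the explanation service is assumed to return the closest point just across its boundary, and the surrogate is a logistic regression trained by plain gradient descent. All names (`target_predict`, `target_counterfactual`, `extract_surrogate`) and parameters are hypothetical.

```python
import math
import random

# Hypothetical hidden target: a linear classifier the attacker can only query.
W_TRUE = [2.0, -1.0]
B_TRUE = 0.5

def score(w, b, x):
    """Linear score w . x + b."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def target_predict(x):
    """Target's decision: 1 (favorable) if the hidden score is non-negative."""
    return int(score(W_TRUE, B_TRUE, x) >= 0)

def target_counterfactual(x, margin=0.05):
    """Assumed explanation service: move x along W_TRUE (the shortest path for a
    linear model) until its score equals +margin, just past the boundary."""
    step = (margin - score(W_TRUE, B_TRUE, x)) / sum(w * w for w in W_TRUE)
    return [xi + step * wi for xi, wi in zip(x, W_TRUE)]

def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def train_logistic(xs, ys, lr=0.5, epochs=500):
    """Fit a logistic-regression surrogate with full-batch gradient descent."""
    w, b = [0.0] * len(xs[0]), 0.0
    for _ in range(epochs):
        gw, gb = [0.0] * len(w), 0.0
        for x, y in zip(xs, ys):
            err = sigmoid(score(w, b, x)) - y  # d(BCE)/d(logit)
            gw = [g + err * xi for g, xi in zip(gw, x)]
            gb += err
        w = [wi - lr * g / len(xs) for wi, g in zip(w, gw)]
        b -= lr * gb / len(xs)
    return w, b

def extract_surrogate(queries):
    """Baseline-style extraction: label each query with the target's output and
    add each returned counterfactual with the opposite (favorable) label."""
    xs, ys = [], []
    for x in queries:
        y = target_predict(x)
        xs.append(x)
        ys.append(y)
        if y == 0:  # counterfactuals are requested only for unfavorable outcomes
            xs.append(target_counterfactual(x))
            ys.append(1)
    return train_logistic(xs, ys)

random.seed(0)
queries = [[random.uniform(-2, 2), random.uniform(-2, 2)] for _ in range(50)]
w_s, b_s = extract_surrogate(queries)

# Fidelity: fraction of fresh points on which surrogate and target agree.
test_points = [[random.uniform(-2, 2), random.uniform(-2, 2)] for _ in range(1000)]
fidelity = sum(
    int(score(w_s, b_s, x) >= 0) == target_predict(x) for x in test_points
) / len(test_points)
print(f"surrogate/target agreement: {fidelity:.2f}")
```

Note that every counterfactual here sits just on the favorable side of the boundary and is trained with the same cross-entropy as an ordinary favorable query, which is exactly the one-sided setting in which decision boundary shift can arise.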

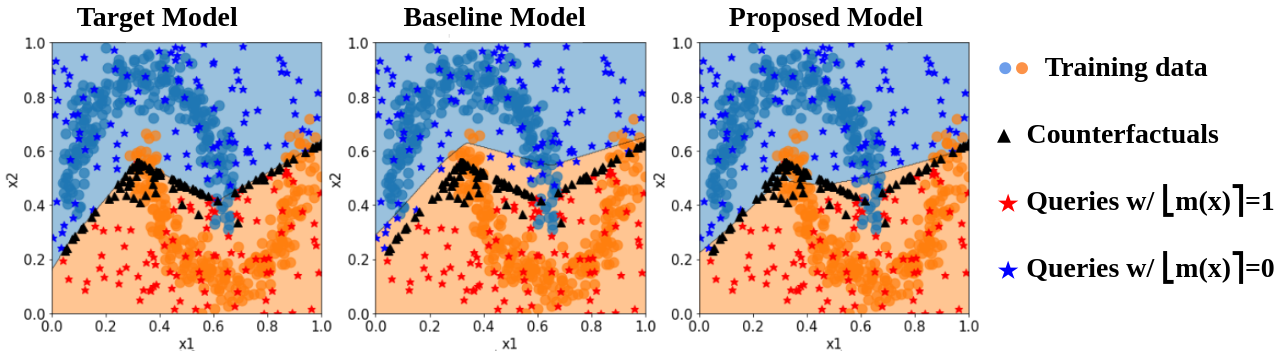

The proposed attack mitigates decision boundary shift, a problem that arises when a system provides counterfactual explanations only for queries that originate on one side of the target model’s decision boundary (typically the unfavorable side).
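The description above does not spell out the mechanism, so the following is only a hypothetical illustration of how boundary proximity could be exploited, not the project's exact loss. Under plain binary cross-entropy, counterfactuals (all labeled favorable and all lying just past the boundary) keep being pushed toward probability 1, which drags the surrogate's boundary away from them and into the query side. A "clamped" loss, assumed here, penalizes a counterfactual only while the surrogate still scores it below a threshold (0.5 in this sketch). The names `bce`, `clamped_loss`, and `batch_loss` are illustrative.

```python
import math

def bce(p, y, eps=1e-12):
    """Binary cross-entropy between predicted probability p and label y in {0, 1}."""
    p = min(max(p, eps), 1.0 - eps)  # avoid log(0)
    return -(y * math.log(p) + (1 - y) * math.log(1.0 - p))

def clamped_loss(p, y, is_counterfactual, threshold=0.5):
    """Hypothetical boundary-aware loss.

    Ordinary queries keep the usual BCE. A counterfactual (always favorable,
    y = 1) is penalized only while the surrogate scores it below `threshold`;
    once it is on the favorable side it contributes nothing, so training does
    not push the surrogate boundary away from the counterfactuals.
    """
    if is_counterfactual:
        return bce(p, 1) if p < threshold else 0.0
    return bce(p, y)

def batch_loss(probs, labels, cf_flags):
    """Average clamped loss over a batch of surrogate outputs."""
    terms = [clamped_loss(p, y, f) for p, y, f in zip(probs, labels, cf_flags)]
    return sum(terms) / len(terms)

# A counterfactual already scored 0.6 costs nothing under the clamped loss,
# whereas plain BCE would still push it toward 1.
print(clamped_loss(0.6, 1, is_counterfactual=True))  # 0.0
print(round(bce(0.6, 1), 4))                         # 0.5108
```

Substituting `batch_loss` for plain cross-entropy in a surrogate's training loop is one way to let counterfactuals act as "stay on the favorable side" constraints rather than as confidently favorable examples.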

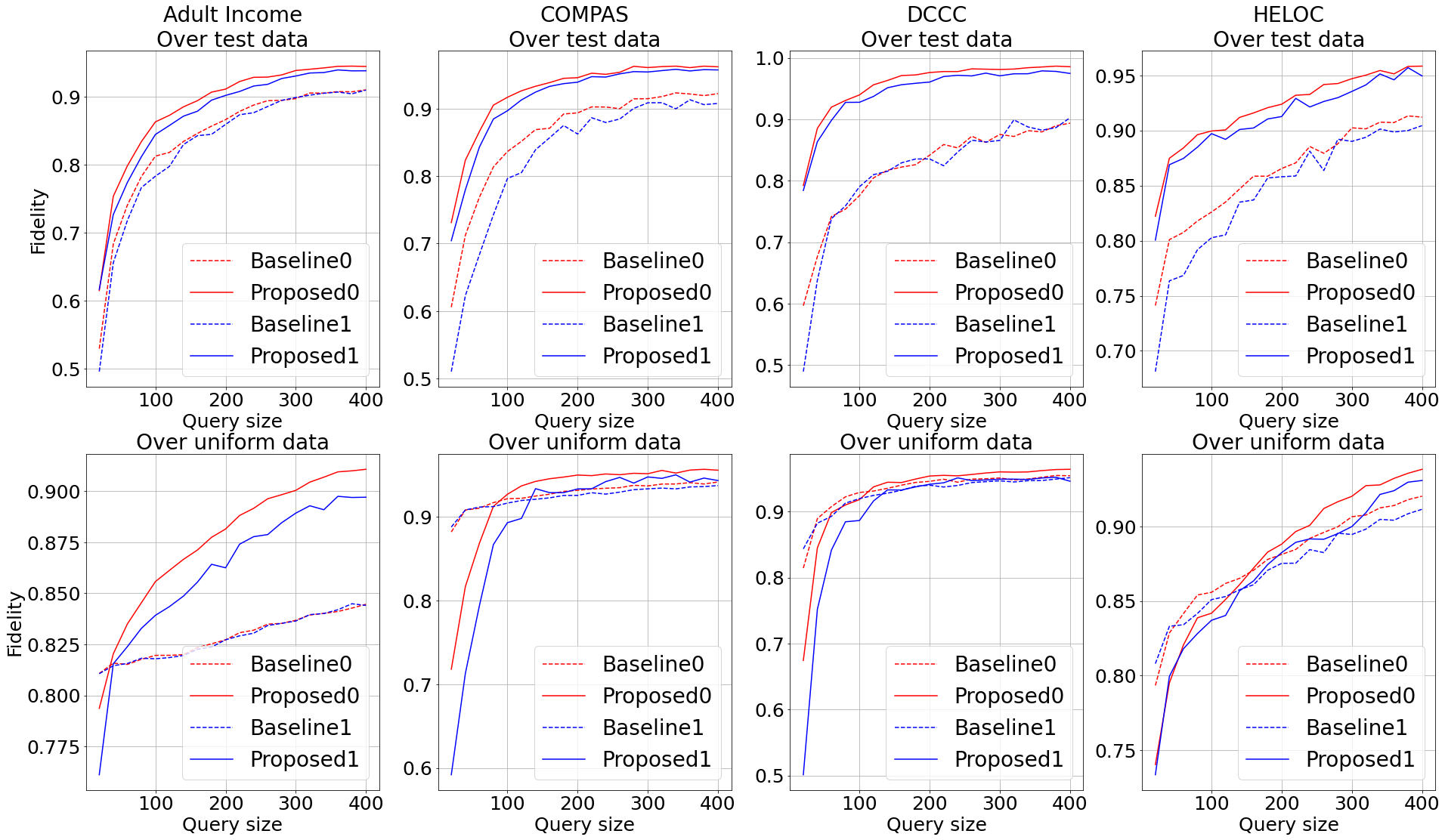

Consequently, the proposed model extraction attack outperformed the baseline attack of [1] across a wide range of experiments.

This project was supported by Northrop Grumman Corporation.

Github: https://github.com/pasandissanayake/model-reconstruction-using-counterfactuals

Counterfactual explanations for data-efficient LLM distillation

Analogous to counterfactual explanations on tabular data, a counterfactual can be defined for natural-language classification tasks such as sentiment analysis. Here, it is the minimal (natural-looking) perturbation to a sentence that causes a classifier (e.g., an LLM) to assign the perturbed sentence to a different class than the original.
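To make this definition concrete, the sketch below searches for a one-word counterfactual against a toy lexicon-based sentiment classifier that stands in for an LLM. The lexicon, the substitution table, and the function names are hypothetical. "Minimal" is approximated here as a single-word substitution, and fluency is not checked, which a real counterfactual generator (for example, one that prompts an LLM for edits) would need to handle.

```python
# Hypothetical toy sentiment classifier: sums word polarities from a tiny lexicon.
LEXICON = {"great": 2, "good": 1, "fine": 0.5, "bad": -1, "awful": -2, "boring": -1.5}

def classify(sentence):
    """Return 'positive' if the summed word polarity is > 0, else 'negative'."""
    score = sum(LEXICON.get(w, 0) for w in sentence.lower().split())
    return "positive" if score > 0 else "negative"

# Hypothetical candidate replacements for each word (assumed, not learned).
SUBSTITUTES = {
    "great": ["fine", "bad"],
    "good": ["bad", "boring"],
    "bad": ["good", "fine"],
    "boring": ["fine", "great"],
}

def one_word_counterfactual(sentence):
    """Try single-word substitutions (the smallest edit considered here) and
    return the first edited sentence whose predicted label differs, or None."""
    words = sentence.split()
    original = classify(sentence)
    for i, w in enumerate(words):
        for alt in SUBSTITUTES.get(w.lower(), []):
            candidate = " ".join(words[:i] + [alt] + words[i + 1:])
            if classify(candidate) != original:
                return candidate
    return None

print(one_word_counterfactual("the plot was good"))  # the plot was bad
```

The original sentence and its counterfactual form a minimally different pair with opposite labels, which is the kind of pair the distillation direction above builds on.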

[1] Ulrich Aïvodji, Alexandre Bolot, and Sébastien Gambs, “Model extraction from counterfactual explanations,” arXiv preprint arXiv:2009.01884, 2020.

References

2025

- Few-Shot Knowledge Distillation of LLMs With Counterfactual Explanations. In NeurIPS, 2025.
